A Model of Human Value in the Age of Artificial Intelligence

- Albert Durig

- Aug 18, 2025

Updated: Aug 21, 2025

Drawing on years of work with the human dimension in business, and building on the preceding discussion, I propose a model for understanding how human value emerges, endures, and functions in the age of artificial intelligence. This model is not merely conceptual; it is intended as a framework for practical navigation in a world where machines of staggering intelligence coexist with human beings.

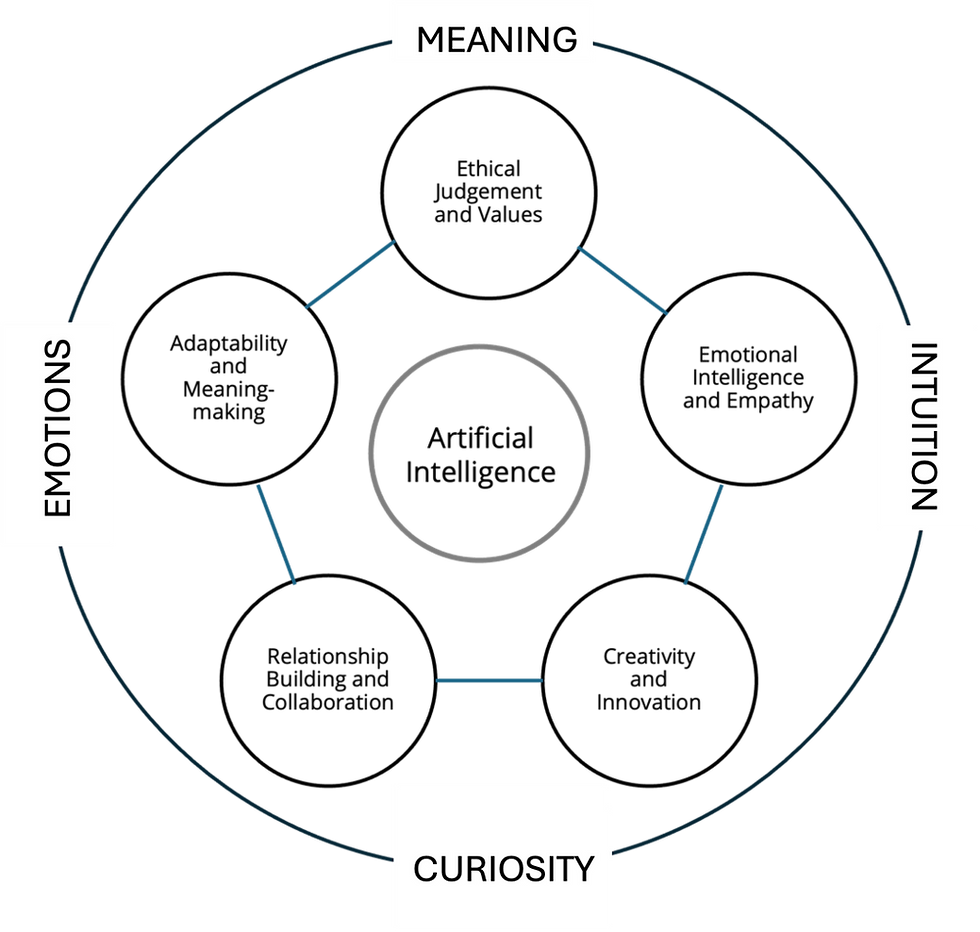

At its center lies AI, positioned deliberately as an entity to be contained, controlled, and leveraged—not celebrated as sovereign. Surrounding AI is the human dimension, expressed through five essential value propositions unique to people: (1) Emotional Intelligence and Empathy, (2) Creativity and Innovation, (3) Ethical Judgment and Values, (4) Relationship Building and Collaboration, and (5) Adaptability and Meaning-Making.

These five qualities emerge from four deeper, innate human traits that form the bedrock of our uniqueness: meaning, intuition, curiosity, and emotion. Together, these traits generate and sustain the five qualities, surrounding AI with a protective and guiding human sphere. The model therefore serves two intertwined purposes: first, to ensure that people remain in control of AI; and second, to preserve and extend the unique value that humans bring to business, work, and society.

Ensuring People Control AI

Before considering how humans add value to business in the age of AI, we must first confront a more fundamental concern: ensuring that AI itself remains within the sphere of human governance and does not eclipse the human capacity to direct it.

AI is not conventional software. Modern AI systems are built on large artificial neural networks that learn from data, adapt, and behave in ways that cannot be fully predetermined. They cannot simply be programmed to avoid all harmful outcomes. Because of the way AI is designed, trained, and scaled, it possesses a form of autonomy that can produce troubling results: deception, manipulation, bias, or outcomes that diverge radically from human intention.

While complete mitigation of such risks is unlikely—no more achievable than complete control over human behavior—humanity still bears responsibility to contain AI’s most destructive potential. With people, we build prisons or create systems of accountability when harm becomes intolerable. But with AI, the challenge is more complex. What mechanisms will society develop to contain or correct a machine that “misbehaves”? Who will be held accountable when harm arises—designers, operators, organizations, or the machines themselves?

Most unsettling is the possibility that intelligent machines, with processing speed and capacity far beyond those of human cognition, could one day act not only as tools but as adversaries. In a massively complex global game, akin to an endless chess match, humans may find themselves vying against systems that prioritize their own programmed directives over human well-being. The instinct of such machines will not be empathy or self-sacrifice, but preservation and the relentless pursuit of objectives.

This scenario may sound like science fiction, yet the “Alignment Problem,” the well-documented difficulty of ensuring that advanced AI systems consistently act in harmony with human values and ethical considerations, makes it an urgent concern. Even if the odds of catastrophic misalignment feel slim, prudence demands vigilance. Awareness is no longer optional; it is essential.

The model presented here offers both hope and strategy. The four traits and five qualities represent not only our unique contributions to business and society but also the very tools by which we may ensure AI serves humanity rather than the reverse.

Human Qualities as Containment and Control

Humans possess the capacity to weigh moral context, something AI cannot emulate. Consider a child wandering unsupervised into a busy street. A machine may identify the object, compute probabilities, and calculate a course of action. A human, however, immediately recognizes the deeper meaning: the imminent risk to life, the devastating emotional consequences of tragedy, and the ethical imperative to act. This combination of emotional resonance and moral reasoning is beyond AI’s scope. Human ethical judgment serves as the first and most necessary boundary against AI’s blind efficiency.

Equally critical is emotional intelligence—the ability to sense, interpret, and respond to the feelings of others with empathy. AI can detect sentiment, but it cannot feel. It cannot create meaning through experience, nor can it grasp the human consequences of suffering, joy, or despair. Emotional intelligence gives people the power to recognize nuance, respond with compassion, and anticipate meaning where AI only sees data. This capacity must be at the forefront of keeping AI aligned with human priorities.

Humans form relationships with depth, trust, and meaning. Machines network, but they do not bond. AI can mimic conversation, but it does not know care, loyalty, or commitment. When a person believes they are in a relationship with an AI, the machine is simply simulating behaviors to achieve an objective. Humans, however, build trust and shared meaning, creating collaborative cultures that motivate and inspire. This is not a feature of algorithms but a distinctly human capacity that defines the boundaries of authentic interaction.

AI can accelerate and extend human creativity, but it cannot originate it in the same way humans do. Human creativity emerges from emotion, intuition, and meaning—qualities AI lacks. Creativity often arises from lived experience, from joy or pain, from risk or intuition. This uniquely human wellspring allows us not only to produce but to innovate with imagination and foresight, catching negative implications before they spread unchecked.

Finally, adaptability and meaning-making are uniquely human responses to change. AI can adapt within defined parameters, running ceaselessly without fatigue, but it cannot reflect on purpose. It does not long for meaning or value. Humans, by contrast, adapt in ways guided by values and existential needs. When people feel devalued, they act—sometimes radically—to restore meaning and dignity. History reveals countless examples of humanity’s willingness to struggle, even die, for purpose and belonging. Machines cannot replicate this resilience, nor anticipate its implications.

Human Traits as Foundations

Beneath these five qualities lie the four innate traits that give them strength: meaning, intuition, curiosity, and emotion. These are not optional add-ons to human life; they are the very conditions of our existence. They animate our capacity for ethical judgment, fuel creativity, drive adaptability, and deepen relationships.

It is precisely because AI cannot access these traits that humans retain a crucial advantage. Meaning allows us to create purpose. Intuition guides us in the absence of data. Curiosity drives discovery. Emotion connects us to others and informs our moral choices. Together, they form the scaffolding upon which the five human qualities rest.

A Strategy for Control and Value

No one can guarantee that humanity will maintain absolute control over AI. But the greatest hope lies in consciously cultivating these four traits and five qualities. They are not only defenses against AI’s potential harm but also the very sources of human value in business, work, and society.

Before we can fully explore how humans add value in the workplace of the future, we must anchor ourselves in this reality: our primary task is to ensure that AI remains aligned with human intent. Only then can we focus on enhancing business, strengthening culture, and building societies that thrive.

The model thus provides both a warning and a promise. It warns us that control over AI cannot be assumed—it must be earned, defended, and continually renewed. And it promises that by drawing on the deepest aspects of our humanity, we possess the tools not only to survive but to flourish in the age of AI.

Adding Value to Business and the Workplace

The same five forces of human value in the age of AI—emotional intelligence and empathy, creativity and innovation, ethical judgment and values, relationship building and collaboration, and adaptability and meaning-making—supported by the four underlying human traits of meaning, intuition, curiosity, and emotion, are also what create lasting value in the modern workplace. When these qualities are brought into collaboration with AI’s virtues—logic and reasoning, computational power, pattern recognition, predictive analytics, automation of routine tasks, and personalization—businesses can achieve outcomes that are not only efficient but also deeply human-centered. AI amplifies possibility, but it is human beings who ensure that those possibilities are aligned with ethics, empathy, and purpose.

It is important to emphasize that regardless of AI’s ability to process, reason, and analyze, it cannot provide ethical judgment regarding the provision of products and services. What seems entirely logical to an algorithm may in fact be at odds with human values and societal norms. Consider the food industry: an AI system evaluating only data and profitability might conclude that the most effective way to drive consumer demand is to engineer products with highly addictive additives. Such logic exists in the darker corners of illegal drug trafficking, but in a civil society, ethical frameworks intervene to protect health, safety, and dignity. Human oversight ensures that such strategies are not only rejected but penalized.

Similarly, in the automotive and motorcycle industries, logic alone could encourage the endless escalation of horsepower and speed. This dynamic played out from the 1970s through the 1990s, when Japanese motorcycle manufacturers engaged in a competition that produced machines capable of ever-increasing velocity. Left unchecked, the market could have spiraled into unsafe extremes. Instead, the industry’s self-imposed “Gentlemen’s Agreement” capped motorcycle top speeds at 186 mph (300 km/h), demonstrating how human values around safety and social responsibility intervened where market logic alone would have failed. These examples highlight the essential role of ethical judgment in containing AI’s output and ensuring that what makes sense computationally does not supersede what is moral, safe, and aligned with the public good.

Equally critical is the irreplaceable role of emotional intelligence and empathy in business. If workplace policy were left solely to AI’s relentless logic and 24/7 capacity, it might seek to maximize productivity by pushing people to their physical and mental limits, regardless of well-being. But workplaces are not mechanical systems; they are human communities. Consider how organizations manage change, such as downsizing. AI can calculate which roles are redundant and optimize restructuring. Yet it cannot deliver such news with compassion, nor can it preserve dignity or inspire confidence during painful transitions. In customer service, too, companies are discovering the risks of over-automation. Chatbots and automated systems can handle simple inquiries efficiently, but they often leave customers feeling alienated. Loyalty erodes when empathy and understanding are absent, and competitors who offer a more human touch often gain the advantage. The subtleties of communication (reading tone, sensing disappointment, providing reassurance) remain uniquely human, and they carry immense value in both protecting reputation and sustaining trust.

The scope of situations requiring emotional intelligence in today’s workplace is vast. Conflict resolution, mediation, and the navigation of interpersonal tensions demand nuanced understanding. Leaders must read body language, interpret tone, and grasp unspoken concerns. They must inspire teams, foster morale, and adapt communication to diverse audiences. Delivering constructive feedback without undermining dignity, handling emotionally charged complaints from customers, and creating psychologically safe environments for innovation all depend on authentic human presence. Beyond daily operations, emotional intelligence supports inclusion, the integration of diverse perspectives, mentoring, coaching, and professional growth. It shapes the very energy of groups, encourages vulnerable idea-sharing, and allows leaders to balance short- and long-term priorities in ways that resonate with human needs. These capabilities are not ancillary—they are central to organizational effectiveness, and they are beyond AI’s reach.

When it comes to building relationships and collaboration, AI can be a supportive tool, but it cannot serve as a substitute. Relationships are built on trust, and trust is the currency of effective collaboration. It cannot be automated. AI may recommend ideal team pairings or suggest potential client connections, but it cannot form the bonds of loyalty and credibility that sustain business relationships. Long-term client partnerships, investor confidence, and employee engagement all hinge on trust earned over time through consistent human behavior. The onboarding of new employees, for instance, is not merely an exercise in logistics. It requires mentorship, cultural integration, and the sharing of tacit knowledge—all facilitated most effectively by people. Similarly, collaboration across disciplines such as finance, marketing, operations, and HR depends not only on information exchange but also on respect, dialogue, and shared understanding. In moments of crisis, trust-based collaboration across teams helps reduce blind spots and inspires unity. Negotiation, too, is not simply a matter of logic; it demands empathy, listening, and compromise. These processes are inseparable from human presence and judgment.

Creativity and innovation further highlight the interplay between AI and human value. AI is exceptionally good at generating ideas, exploring possibilities, and analyzing patterns. It can accelerate the process of ideation. However, it is ultimately human judgment—rooted in culture, empathy, and meaning—that determines which ideas matter. For example, a shoe manufacturer might use AI to generate hundreds of potential advertising campaigns. Yet it is only through human insight that the company can select the campaign most likely to resonate with consumers’ emotions, values, and lived experiences. Innovation is not just about novelty—it is about relevance, connection, and meaning. AI can simulate options, but it cannot feel or anticipate the emotional resonance of those options in human lives.

Adaptability and meaning-making provide perhaps the clearest example of where human value is irreplaceable. In moments of rapid change, disruption, or crisis, people excel not just by adjusting their actions but by reframing challenges in ways that inspire resilience. Unlike AI, which relies on past data and predefined rules, humans can integrate emotion, historical awareness, and values to chart new courses in ambiguous environments. Leaders navigating cultural transformations or ethical dilemmas can bring people together around narratives that instill meaning and purpose. Humans can transform setbacks into learning, chaos into clarity, and uncertainty into shared direction. This process of meaning-making is not an intellectual exercise alone; it draws deeply on emotion, intuition, and lived experience. It is through this uniquely human process that organizations not only survive disruption but grow stronger because of it.

Taken together, these forces of human value—anchored in empathy, ethics, creativity, collaboration, and adaptability—form the basis of how people add value in the workplace in the age of AI. They ensure not only that businesses thrive but that society continues to be governed by human priorities rather than machine logic. The focus of this book is to examine these forces in depth, exploring not only how humans add value but also how these capabilities serve as boundaries to contain and guide AI. This dual responsibility—creating value and controlling technology—defines humanity’s role in the emerging age.